For years now, I’ve preferred to do all my development on a dedicated machine. This means my desktop (or laptop, depending) is just a glorified terminal-and-editor-running appliance. It probably comes from years of crashing boxes during kernel development, but it is also relevant for something like Nova, where running unit tests will consume all resources. The last thing I want is for unit tests to slow down my email and web browsing activities while I wait for them to complete.

A few years ago, I started automating the sleep activities of my development machine. There’s really no reason for that box to run all night when I’m not using it, especially since it’s beefy (and thus power-thirsty). I used to sleep the machine on a schedule that was mostly compatible with my work schedule. Lately, I’ve been using the idle times of any login sessions to determine idleness, sleeping the box after they pass some threshold with an only-works-for-me hack. This behavior is pretty handy, because once I wake it up, it stays up until I stop using it.

At the time I started doing this, I would just have my always-running workstation wake the box up right before I normally start working, so that it was ready for me in the morning. By putting the MAC of the development box into /etc/ethers, all I needed to wake it up was:

$ sudo etherwake theobromine
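For reference, the payload that wakes the box is simple enough to build by hand. The following is a minimal sketch, not what etherwake itself does: etherwake sends a raw Ethernet frame, while this version wraps the same magic packet in a UDP broadcast so it does not need root. The function names, the broadcast address, and port 9 are assumptions chosen for illustration.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet for a MAC such as '00:01:02:03:04:05'."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError("expected a 48-bit MAC address, got %r" % mac)
    mac_bytes = bytes.fromhex(hex_digits)
    # Six 0xFF bytes followed by the target MAC repeated sixteen times.
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the magic packet over UDP (address and port are assumptions)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(build_magic_packet(mac), (broadcast, port))
```

Calling `send_magic_packet("00:01:02:03:04:05")` from any machine on the same broadcast domain should have roughly the same effect as the etherwake command above, provided the target's network card has Wake-on-LAN enabled.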

The problems with this are:

- When I’m traveling or on vacation, the workstation keeps waking the development box for no reason, unless I remember to disable the cron job.

- If I’m in another timezone accessing the development box remotely, it’s not online at the right times.

- If I need to wake the machine off schedule, I have to ssh to an always-on machine on the network and wake it up from there.

- Once I moved my desktop to a platform that can reliably suspend and resume a graphical environment, I stopped having an obvious place to run the wake script.

Since the development box sleeps according to lack of demand, what I really wanted was a similar demand-based policy for waking it up. Given that I only access the machine over the network, all I really needed was to monitor the network from a machine that is always on, looking for something trying to contact the box. If I know the development box is down and I see such a request, I can issue the wakeup packet on behalf of the demanding machine without it having to know about the sleep schedule. I ended up with a tool that does exactly that. Its logging looks like this:

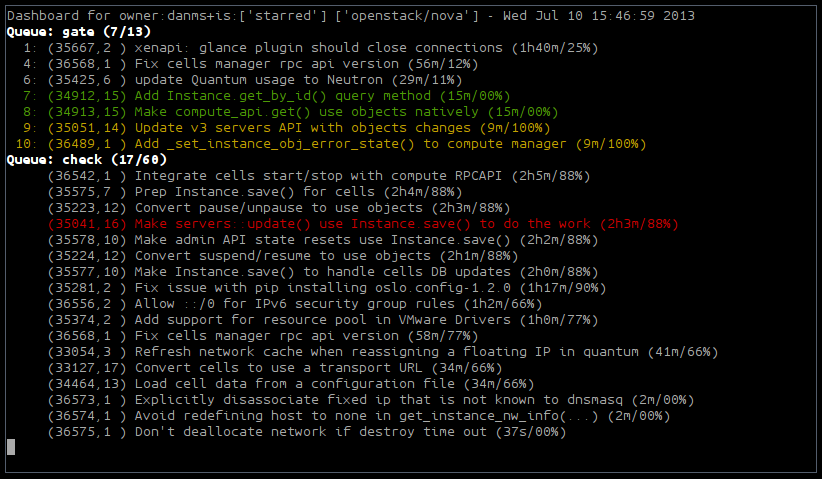

2015-01-30 16:04:43,641 INFO Pinging theobromine [192.168.201.150]: Alive: True (46 sec ago)

2015-01-30 16:04:43,658 INFO theobromine seen alive (since 46 sec ago)

<sleep occurs>

2015-01-30 16:06:22,710 INFO Pinging theobromine [192.168.201.150]: Alive: False (94 sec ago)

2015-01-30 16:25:21,065 INFO Pinging theobromine [192.168.201.150]: Alive: False (1232 sec ago)

<attempt to ssh to theobromine from desktop>

2015-01-30 16:26:36,511 DEBUG ARP: 00:01:02:03:04:05 192.168.1.58 -request-> 00:00:00:00:00:00 192.168.1.20

2015-01-30 16:26:36,511 WARNING Waking theobromine

2015-01-30 16:26:36,889 INFO theobromine seen alive (since 1308 sec ago)

Now, any traffic destined for the development box that generates an ARP request will cause the always-on machine to issue a WoL magic packet to wake it up. That means reconnecting via SSH in the morning, making an edit via Emacs/Tramp, or even just a ping. Aside from the delay of a few seconds when the machine needs waking, it almost appears to the user as if it never goes to sleep.
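The decision at the heart of this can be sketched in a few lines. This is only an illustration under stated assumptions, not the actual tool: `parse_arp` and `should_wake` are hypothetical names, the payload is assumed to be a standard 28-byte IPv4-over-Ethernet ARP body with the Ethernet header already stripped, and capturing the frames (for example from a raw socket, which needs root) is left out.

```python
import socket
import struct

ARP_REQUEST = 1  # ARP operation code for "who has this IP?"
ARP_FORMAT = "!HHBBH6s4s6s4s"  # 28-byte ARP body for IPv4 over Ethernet


def parse_arp(payload: bytes):
    """Return (operation, sender_ip, target_ip) from an ARP payload."""
    (_htype, _ptype, _hlen, _plen, oper,
     _sender_mac, sender_ip, _target_mac, target_ip) = struct.unpack(
        ARP_FORMAT, payload[:28])
    return oper, socket.inet_ntoa(sender_ip), socket.inet_ntoa(target_ip)


def should_wake(payload: bytes, box_ip: str, box_alive: bool) -> bool:
    """Wake only when the box is known to be down and someone asks for its IP."""
    if box_alive or len(payload) < 28:
        return False
    oper, _sender_ip, wanted_ip = parse_arp(payload)
    return oper == ARP_REQUEST and wanted_ip == box_ip
```

The `box_alive` flag stands in for the periodic ping results seen in the log: requests that arrive while the box is up are ignored, so a wakeup packet is only sent when it can actually do something.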